Vhysio


United Kingdom, Durham University

Project Overview

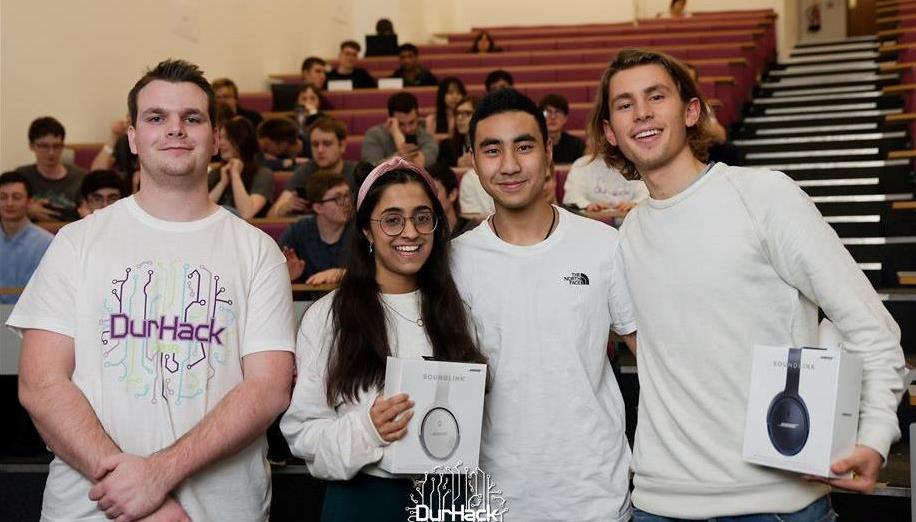

1st Place Winners of DurHack 2019 (Durham University Hackathon)

Access the live application at: https://vhysio.herokuapp.com/

View our presentation slides at: http://bit.ly/2OEQrWv

Our code is available on GitHub: https://github.com/BenHarries/Durhack2019

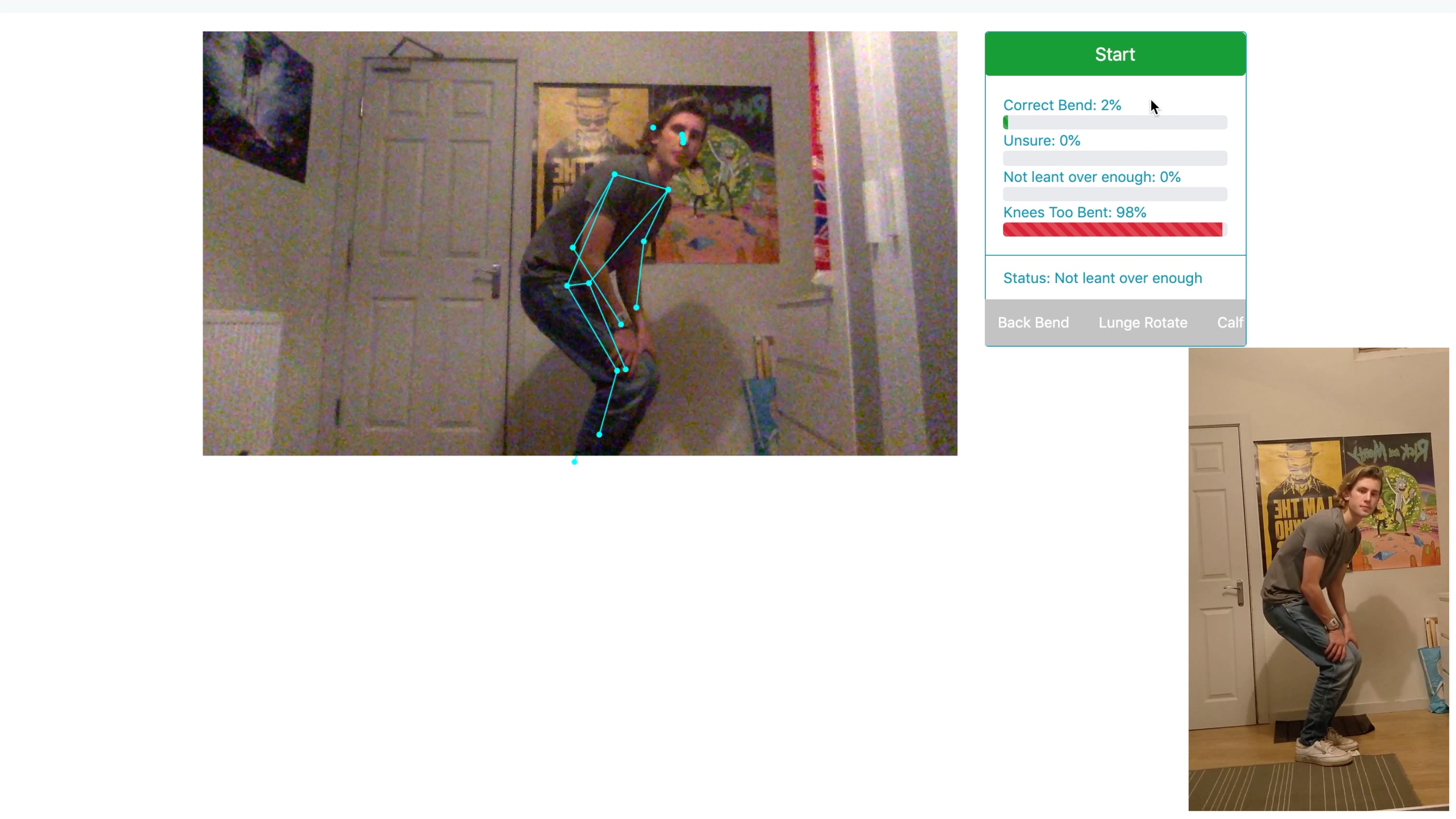

Vhysio is a machine learning web app that uses cutting-edge in-browser deep learning with TensorFlow.js to make physiotherapy accessible to visually impaired people. It talks users through their exercises by responding to their postures in real time.

Vhysio makes it easier for users not only to complete their exercises but also to improve their technique independently.

Technology

We built Vhysio in 24 hours and pitched it to over 200 people, including industry leaders. We are in the process of migrating our web app to Azure.

Vhysio uses machine learning to determine what makes a particular position correct or incorrect. For each pose, a model has been trained on a dataset of images to predict whether the user's position is correct or incorrect, and why, and the app then guides the user by voice.
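A minimal sketch of how a per-pose classifier's output could be turned into spoken guidance, assuming the model returns an array of `{ className, probability }` objects (the shape Teachable Machine models produce). The class names (`correct`, `arms_too_low`, `back_not_straight`), the hint wording, the `feedbackFor` function and the 0.6 confidence threshold are all hypothetical, chosen only for illustration.

```javascript
// Hypothetical classes: one "correct" class plus one class per common mistake.
// The spoken hints are illustrative, not Vhysio's actual wording.
const FEEDBACK = {
  correct: "Good, hold that position.",
  arms_too_low: "Raise your arms a little higher.",
  back_not_straight: "Try to straighten your back.",
};

// Pick the most probable class from a Teachable Machine-style prediction
// array ([{ className, probability }, ...]) and turn it into an instruction.
function feedbackFor(predictions, minConfidence = 0.6) {
  if (predictions.length === 0) return null;
  const best = predictions.reduce((a, b) => (b.probability > a.probability ? b : a));
  // Stay silent when the model is unsure rather than give a possibly wrong correction.
  if (best.probability < minConfidence) return null;
  return {
    isCorrect: best.className === "correct",
    message: FEEDBACK[best.className] ?? "Adjust your position slightly.",
  };
}

const example = feedbackFor([
  { className: "correct", probability: 0.1 },
  { className: "arms_too_low", probability: 0.85 },
  { className: "back_not_straight", probability: 0.05 },
]);
console.log(example);
```

Training one "mistake" class per common error is what lets the app say *why* a position is wrong, not just that it is wrong; the confidence threshold avoids correcting the user on ambiguous frames.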

We used Teachable Machine, Google's web-based machine learning tool, to train our models on the various physiotherapy poses.

We also used Google's Speech-to-Text API to make the application accessible to visually impaired users: they can start their exercises remotely by voice, which is more convenient and easier to use for our target audience.
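Once a speech-to-text service has returned a transcript, the app still has to decide what the user asked for. The sketch below assumes plain-text transcripts and a small, hypothetical command vocabulary (`start`, `stop`, `next`); the `parseCommand` function and the phrases are illustrative, not taken from the Vhysio code. In a browser, the transcript could come from the Web Speech API's `SpeechRecognition` result event (`event.results[0][0].transcript`).

```javascript
// Hypothetical command vocabulary; the real app's phrases may differ.
const COMMANDS = [
  { command: "start", phrases: ["start", "begin"] },
  { command: "stop", phrases: ["stop", "pause", "finish"] },
  { command: "next", phrases: ["next", "skip"] },
];

// Map a recognised transcript to a command, or null if nothing matches.
// Matching is on whole words, so "restart" is not mistaken for "start".
function parseCommand(transcript) {
  const words = transcript.toLowerCase().split(/[^a-z]+/).filter(Boolean);
  for (const { command, phrases } of COMMANDS) {
    if (phrases.some((p) => words.includes(p))) return command;
  }
  return null;
}

console.log(parseCommand("Start the exercise"));
```

Keeping the vocabulary small and matching whole words makes the app tolerant of the extra words users naturally say around a command.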

For text-to-speech, the application uses the browser's Web Speech API. This lets visually impaired users hear whether they are in the right position, because the application tells them to adjust their posture when it is incorrect.
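Because the model runs continuously, a naive implementation would repeat the same correction many times per second. A minimal sketch of one way to avoid this, assuming a hypothetical `SpeechThrottle` helper that speaks a new instruction immediately but repeats an unchanged one only after a delay (4 seconds here, an arbitrary choice). The clock is injected so the logic can be tested outside a browser; in the browser, the actual speech could use `window.speechSynthesis.speak(new SpeechSynthesisUtterance(text))`.

```javascript
// Decides whether an instruction should be spoken now, so the user is not
// talked over by the same correction on every frame.
// In a browser the spoken output could be produced with:
//   window.speechSynthesis.speak(new SpeechSynthesisUtterance(text));
class SpeechThrottle {
  constructor(repeatAfterMs = 4000, now = () => Date.now()) {
    this.repeatAfterMs = repeatAfterMs; // minimum gap before repeating the same text
    this.now = now; // injectable clock, handy for testing
    this.lastText = null;
    this.lastTime = -Infinity;
  }

  // Returns true if `text` should be spoken now, and records it if so.
  shouldSpeak(text) {
    const t = this.now();
    const isNew = text !== this.lastText;
    if (isNew || t - this.lastTime >= this.repeatAfterMs) {
      this.lastText = text;
      this.lastTime = t;
      return true;
    }
    return false;
  }
}
```

New instructions bypass the delay so that a change in posture is acknowledged straight away; only repeats are rate-limited.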

We also use the webcam to track the user's movement: the webcam feed is passed to the machine learning model, which outputs a status describing the user's posture.
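Per-frame predictions from a webcam are noisy, so the status can flicker between classes. A common remedy, sketched here as an assumption rather than as Vhysio's method, is a moving average of class probabilities over the last few frames. The `PredictionSmoother` class and its window size are hypothetical; each frame is assumed to be a Teachable Machine-style array of `{ className, probability }` objects.

```javascript
// Averages class probabilities over the last `windowSize` frames so that a
// single noisy webcam frame does not flip the posture status.
class PredictionSmoother {
  constructor(windowSize = 10) {
    this.windowSize = windowSize;
    this.frames = [];
  }

  // Add one frame's predictions and return the averaged predictions.
  push(predictions) {
    this.frames.push(predictions);
    if (this.frames.length > this.windowSize) this.frames.shift(); // drop oldest frame
    const sums = new Map();
    for (const frame of this.frames) {
      for (const { className, probability } of frame) {
        sums.set(className, (sums.get(className) ?? 0) + probability);
      }
    }
    return [...sums].map(([className, total]) => ({
      className,
      probability: total / this.frames.length,
    }));
  }
}
```

The averaged array has the same shape as a single frame's predictions, so it can be passed unchanged to whatever code turns predictions into feedback. A larger window gives steadier output at the cost of reacting more slowly to genuine changes in posture.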

About the Team

Our team consists of three second-year students pursuing the goal of building tools that use cutting-edge technology, such as machine learning and Microsoft Azure, to produce social impact. All three of us have already achieved a lot in our field for our age: we each completed work experience before the usual penultimate-year summer internships, while achieving first-class results (4.0 GPA equivalent) in Computer Science and being awarded prizes for outstanding academic performance.

Alisa Hussain, 19, spent a week at Cisco shadowing different teams, developing a cutting-edge technology idea as part of a team and pitching it to industry experts. She has always been interested in artificial intelligence and was excited to apply it at her first hackathon, DurHack.

AJ Sung, 19, has worked on many projects, mainly in cybersecurity. He has been flown out to five Hack The Box events around the world as crew, and he interned at AoY Labs as a chatbot developer using NLP and Node.js.

Ben Harries, 19, previously interned as a full-stack developer at MedShr, one of the fastest-growing healthtech startups. After finishing his main project early, he trained two deep learning models using PyTorch that are about to be shipped to over 800,000 users.

The hackathon took place on 23rd to 24th November 2019. The idea came from asking how we could apply machine learning to build a tool for the visually impaired. Ben's mother is a physiotherapist, and we wondered how we could make something that would let her visually impaired patients carry out their stretches at home, improving the recovery process for both patients and physiotherapist.

We learnt a tonne and still have much further to go, but our success at DurHack has spurred us on to build something that truly meets users' needs.

Technologies we are looking to use in our projects

Azure

JavaScript

Machine Learning

Voice Assistance